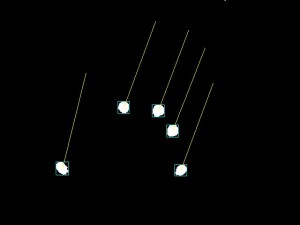

In my previous post we discussed using OpenCV to prepare images for blob detection. We will build upon that foundation by using cvBlobsLib to process our binary images for blobs. A C++ vector object will store our blobs, and the center points and axis-aligned bounding boxes will be computed for each element in this vector. We will define a class that operates on this vector to track our blobs across frames, converting them to an event type. An event will be one of three types, BLOB_DOWN, BLOB_MOVE, or BLOB_UP. At the end of this post we will generate the following result:

We will first declare an enumerated type to label our events, along with a class, cBlob, to describe a blob. At this point cBlob does not need to be a class, but when we tackle the detection of fiducials we will be adding methods to this object, so we'll leave it this way for now. We declare four point objects. Two of these will contain the origin and current location of our blob to define the tracking vector, and two will contain the extrema to define our axis-aligned bounding box. We also declare a variable to hold the event type and a flag for our tracking algorithm.

#ifndef BLOB_H

#define BLOB_H

enum { BLOB_NULL, BLOB_DOWN, BLOB_MOVE, BLOB_UP }; // event types

struct point {

double x, y;

};

class cBlob {

private:

protected:

public:

point location, origin; // current location and origin for defining a drag vector

point min, max; // to define our axis-aligned bounding box

int event; // event type: one of BLOB_NULL, BLOB_DOWN, BLOB_MOVE, BLOB_UP

bool tracked; // a flag to indicate this blob has been processed

};

#endif
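To make the event model concrete, here is a minimal sketch of how a consumer might interpret a blob. It mirrors the declarations above in a standalone form (a plain struct, for brevity). The helpers `eventName` and `dragVector` are hypothetical names introduced for illustration and are not part of the project.

```cpp
#include <string>

// Standalone mirror of the declarations in blob.h, for illustration only.
enum { BLOB_NULL, BLOB_DOWN, BLOB_MOVE, BLOB_UP };

struct point {
    double x, y;
};

struct cBlob {
    point location, origin; // current location and where the drag started
    point min, max;         // axis-aligned bounding box
    int event;              // one of the BLOB_* values above
    bool tracked;           // scratch flag used by the tracker
};

// Hypothetical helper: human-readable label for an event value.
std::string eventName(int event) {
    switch (event) {
        case BLOB_DOWN: return "BLOB_DOWN";
        case BLOB_MOVE: return "BLOB_MOVE";
        case BLOB_UP:   return "BLOB_UP";
        default:        return "BLOB_NULL";
    }
}

// Hypothetical helper: the tracking (drag) vector from origin to location.
point dragVector(const cBlob &blob) {
    point v = { blob.location.x - blob.origin.x,
                blob.location.y - blob.origin.y };
    return v;
}
```

A blob with origin (10, 20) and location (13, 24) would yield the drag vector (3, 4), which is the line segment the renderer later draws for each blob.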

The class, cTracker, will handle the blob detection and tracking. We will pass the binary image (an OpenCV matrix object) prepared by our cFilter object to the trackBlobs() method. In cTracker we declare two objects from the cvBlobsLib library: blob_result, of type CBlobResult, will store a list of detected blobs, and current_blob, a pointer to a CBlob, will allow us to iterate through that list. We declare a minimum area for filtering blobs and a maximum radius for tracking blobs frame to frame. We also declare instances of helper classes available in cvBlobsLib to extract blob properties, and an OpenCV IplImage to hold our binary image in the type required by the CBlobResult constructor. Finally, we declare two vectors of cBlob type for storing our processed blobs (current and previous frame).

#ifndef TRACKER_H

#define TRACKER_H

#include <vector>

#include <opencv/cv.h>

#include "../cvblobslib/BlobResult.h"

#include "blob.h"

class cTracker {

private:

CBlobResult blob_result;

CBlob *current_blob;

double min_area, max_radius;

// instances of helper classes for obtaining blob location and bounding box

CBlobGetXCenter XCenter;

CBlobGetYCenter YCenter;

CBlobGetMinX MinX;

CBlobGetMinY MinY;

CBlobGetMaxX MaxX;

CBlobGetMaxY MaxY;

// we will convert the matrix object passed from our cFilter class to an object of type IplImage for calling the CBlobResult constructor

IplImage img;

// storage of the current blobs and the blobs from the previous frame

std::vector<cBlob> blobs, blobs_previous;

protected:

public:

cTracker(double min_area, double max_radius);

~cTracker();

void trackBlobs(cv::Mat &mat, bool history);

std::vector<cBlob>& getBlobs();

};

#endif

In our trackBlobs() method we first convert our binary image to an object of type IplImage and pass it to the CBlobResult constructor. We then apply a filter to exclude all blobs with an area less than our specified minimum. Next we clear the blobs from two frames ago and store all the blobs that were extant in the previous frame, excluding those with event type BLOB_UP. We then iterate through blob_result to extract the properties of the blobs in the current frame, setting the event type of each to BLOB_DOWN.

We initialize the tracked flag to false for all blobs in the previous frame and enter our main tracking loop. We simply loop through all current blobs and search for an untracked blob in the previous frame that lies within our specified maximum radius. If such a blob exists, we update the event type of the current blob from BLOB_DOWN to BLOB_MOVE and set its origin according to the history flag: when history is true the origin is copied from the previous blob's origin, giving a tracking vector that starts at the original BLOB_DOWN event; when it is false the origin is set to the previous blob's location, giving a vector that spans only the last frame. Lastly, any blobs in the previous frame that weren't tracked are given the event type BLOB_UP and pushed onto the current frame's blob vector. Note that this matching compares every current blob against every previous blob, so its complexity is O(n^2) in the number of blobs.

Additionally, we define a getBlobs() method for returning a reference to the current blob vector.
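The description above accepts any untracked previous blob that falls inside the radius. When several candidates qualify, one possible refinement is to pick the nearest one. The following is a sketch of that variation, not the method used in this project. `nearestWithinRadius` is a hypothetical name, and the function compares squared distances so that no square root is needed.

```cpp
#include <cstddef>
#include <vector>

struct point {
    double x, y;
};

// Returns the index of the unclaimed candidate closest to p that lies
// strictly within max_radius, or -1 if there is none.
int nearestWithinRadius(const point &p,
                        const std::vector<point> &candidates,
                        const std::vector<bool> &claimed,
                        double max_radius) {
    int best = -1;
    double best_d2 = max_radius * max_radius; // squared radius threshold
    for (std::size_t j = 0; j < candidates.size(); j++) {
        if (claimed[j]) continue; // already matched to another blob
        double dx = p.x - candidates[j].x;
        double dy = p.y - candidates[j].y;
        double d2 = dx * dx + dy * dy;
        if (d2 < best_d2) { // strictly closer than the best so far
            best_d2 = d2;
            best = static_cast<int>(j);
        }
    }
    return best;
}
```

The caller would mark the returned index as claimed before moving on to the next current blob, which plays the same role as the tracked flag in the project's code.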

#include <cmath>

#include "tracker.h"

cTracker::cTracker(double min_area, double max_radius) : min_area(min_area), max_radius(max_radius) {

}

cTracker::~cTracker() {

}

void cTracker::trackBlobs(cv::Mat &mat, bool history) {

double x, y, min_x, min_y, max_x, max_y;

cBlob temp;

// convert our OpenCV matrix object to one of type IplImage

img = mat;

// cvblobslib blob extraction

blob_result = CBlobResult(&img, NULL, 127, false);

blob_result.Filter(blob_result, B_EXCLUDE, CBlobGetArea(), B_LESS, min_area); // filter blobs with area less than min_area units

// clear the blobs from two frames ago

blobs_previous.clear();

// before we populate the blobs vector with the current frame, we need to store the live blobs in blobs_previous

for (int i = 0; i < blobs.size(); i++)

if (blobs[i].event != BLOB_UP)

blobs_previous.push_back(blobs[i]);

// populate the blobs vector with the current frame

blobs.clear();

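// NOTE: the loop starts at index 1 to skip the large blob that cvBlobsLib typically assigns to index 0; this is not guaranteed (see the comments below), so blob 0 may occasionally be a real blob

for (int i = 1; i < blob_result.GetNumBlobs(); i++) {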

current_blob = blob_result.GetBlob(i);

x = XCenter(current_blob);

y = YCenter(current_blob);

min_x = MinX(current_blob);

min_y = MinY(current_blob);

max_x = MaxX(current_blob);

max_y = MaxY(current_blob);

temp.location.x = temp.origin.x = x;

temp.location.y = temp.origin.y = y;

temp.min.x = min_x; temp.min.y = min_y;

temp.max.x = max_x; temp.max.y = max_y;

temp.event = BLOB_DOWN;

blobs.push_back(temp);

}

// initialize previous blobs to untracked

for (int i = 0; i < blobs_previous.size(); i++) blobs_previous[i].tracked = false;

// main tracking loop -- O(n^2) -- simply looks for a blob in the previous frame within a specified radius

for (int i = 0; i < blobs.size(); i++) {

for (int j = 0; j < blobs_previous.size(); j++) {

if (blobs_previous[j].tracked) continue;

if (sqrt(pow(blobs[i].location.x - blobs_previous[j].location.x, 2.0) + pow(blobs[i].location.y - blobs_previous[j].location.y, 2.0)) < max_radius) {

blobs_previous[j].tracked = true;

blobs[i].event = BLOB_MOVE;

blobs[i].origin.x = history ? blobs_previous[j].origin.x : blobs_previous[j].location.x;

blobs[i].origin.y = history ? blobs_previous[j].origin.y : blobs_previous[j].location.y;

}

}

}

// add any blobs from the previous frame that weren't tracked as having been removed

for (int i = 0; i < blobs_previous.size(); i++) {

if (!blobs_previous[i].tracked) {

blobs_previous[i].event = BLOB_UP;

blobs.push_back(blobs_previous[i]);

}

}

}

std::vector<cBlob>& cTracker::getBlobs() {

return blobs;

}
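To see the event lifecycle without a camera, the tracking step can be exercised on hand-written center points. The sketch below is a simplified stand-in for trackBlobs(), assuming blobs are reduced to their centers and cvBlobsLib is left out entirely. `trackFrame` and the trimmed `cBlob` are hypothetical names for illustration. Unlike the loop above, it stops at the first match for each current blob, so one current blob never claims more than one previous blob.

```cpp
#include <cstddef>
#include <vector>

enum { BLOB_NULL, BLOB_DOWN, BLOB_MOVE, BLOB_UP };

struct point {
    double x, y;
};

// Trimmed-down blob: the bounding box is omitted for brevity.
struct cBlob {
    point location, origin;
    int event;
    bool tracked;
};

// One tracking step: given last frame's blobs and this frame's centers,
// return this frame's blobs with their events assigned.
std::vector<cBlob> trackFrame(const std::vector<cBlob> &last,
                              const std::vector<point> &centers,
                              double max_radius, bool history) {
    // carry over only the blobs that were still live last frame
    std::vector<cBlob> previous;
    for (std::size_t i = 0; i < last.size(); i++) {
        if (last[i].event != BLOB_UP) {
            previous.push_back(last[i]);
            previous.back().tracked = false;
        }
    }

    // every detected center starts out as a new touch
    std::vector<cBlob> current;
    for (std::size_t i = 0; i < centers.size(); i++) {
        cBlob b = { centers[i], centers[i], BLOB_DOWN, false };
        current.push_back(b);
    }

    // match each current blob to an unclaimed previous blob within the radius
    const double r2 = max_radius * max_radius;
    for (std::size_t i = 0; i < current.size(); i++) {
        for (std::size_t j = 0; j < previous.size(); j++) {
            if (previous[j].tracked) continue;
            double dx = current[i].location.x - previous[j].location.x;
            double dy = current[i].location.y - previous[j].location.y;
            if (dx * dx + dy * dy < r2) {
                previous[j].tracked = true;
                current[i].event = BLOB_MOVE;
                current[i].origin = history ? previous[j].origin
                                            : previous[j].location;
                break; // first match wins (a choice made for this sketch)
            }
        }
    }

    // previous blobs that nobody claimed have been lifted
    for (std::size_t j = 0; j < previous.size(); j++) {
        if (!previous[j].tracked) {
            previous[j].event = BLOB_UP;
            current.push_back(previous[j]);
        }
    }
    return current;
}
```

Feeding this a single point that appears, moves a few pixels, and then disappears should produce BLOB_DOWN, BLOB_MOVE, and BLOB_UP on successive frames, after which the blob is dropped from the vector.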

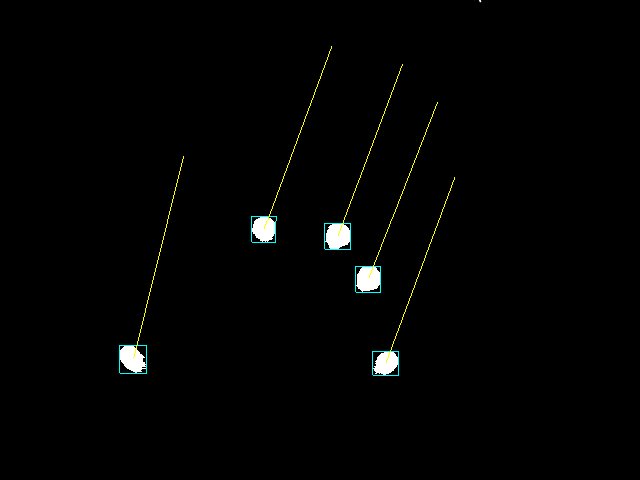

Here we will define a function to be added to our OpenGL helper functions. This function will take our blob vector and render the axis-aligned bounding boxes and tracking vectors.

void renderBlobs(std::vector<cBlob>& blobs, GLfloat r, GLfloat g, GLfloat b, GLfloat drag_r, GLfloat drag_g, GLfloat drag_b) {

glDisable(GL_TEXTURE_2D);

for (int i = 0; i < blobs.size(); i++) {

glColor4f(r, g, b, 1.0f);

glBegin(GL_LINE_LOOP);

glVertex3f(blobs[i].min.x, blobs[i].min.y, 0.0f);

glVertex3f(blobs[i].min.x, blobs[i].max.y, 0.0f);

glVertex3f(blobs[i].max.x, blobs[i].max.y, 0.0f);

glVertex3f(blobs[i].max.x, blobs[i].min.y, 0.0f);

glEnd();

glColor4f(drag_r, drag_g, drag_b, 1.0f);

glBegin(GL_LINES);

glVertex3f(blobs[i].origin.x, blobs[i].origin.y, 0.0f);

glVertex3f(blobs[i].location.x, blobs[i].location.y, 0.0f);

glEnd();

}

}

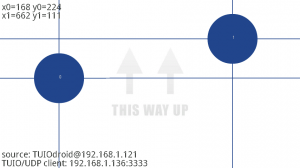

There are only a few modifications to be made to our main.cc file. We add a couple of constants, TMINAREA and TMAXRADIUS, create an instance of cTracker, and near the end of the main() function we call the trackBlobs() method and renderBlobs() helper function.

#include <SDL/SDL.h>

#include "src/filter.h"

#include "src/glhelper.h"

#include "src/tracker.h"

// capture parameters

static const int CDEVICE = 0;

static const int CWIDTH = 640;

static const int CHEIGHT = 480;

// filter parameters

static const int FKERNELSIZE = 7; // for gaussian blur

static const double FSTDDEV = 1.5;

static const int FBLOCKSIZE = 47; // for adaptive threshold

static const int FC = -5;

// display (native resolution of projector)

static const int WIDTH = 640; //1280

static const int HEIGHT = 480; //800

static const int BPP = 32;

// tracker parameters

static const double TMINAREA = 16; // minimum area of blob to track

static const double TMAXRADIUS = 24; // a blob is identified with a blob in the previous frame if it exists within this radius

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////// main

int main(int argc, char *argv[]) {

bool active = true, balance = true, fullscreen = false;

int render = 0;

cFilter filter(CDEVICE, CWIDTH, CHEIGHT, FKERNELSIZE, FSTDDEV, FBLOCKSIZE, FC);

filter.balance(balance);

cTracker tracker(TMINAREA, TMAXRADIUS);

SDL_Init(SDL_INIT_EVERYTHING);

SDL_Surface *screen = SDL_SetVideoMode(WIDTH, HEIGHT, BPP, (fullscreen ? SDL_FULLSCREEN : 0) | SDL_HWSURFACE | SDL_OPENGL);

SDL_Event event;

setupOrtho(WIDTH, HEIGHT);

glClearColor(0.0f, 0.0f, 0.0f, 0.0f);

GLuint texture;

setupTexture(texture);

while (active) {

while (SDL_PollEvent(&event)) {

switch (event.type) {

case SDL_QUIT:

active = false;

break;

case SDL_KEYDOWN:

switch (event.key.keysym.sym) {

case SDLK_f: // toggle fullscreen

fullscreen ^= true;

screen = SDL_SetVideoMode(WIDTH, HEIGHT, BPP, (fullscreen ? SDL_FULLSCREEN : 0) | SDL_HWSURFACE | SDL_OPENGL);

break;

case SDLK_b: // toggle balance

balance ^= true;

filter.balance(balance);

break;

case SDLK_1: // captured frame

render = 0;

break;

case SDLK_2: // filtered frame

render = 1;

break;

case SDLK_3: // gaussian blur

render = 2;

break;

case SDLK_4: // adaptive threshold

render = 3;

break;

}

break;

}

}

filter.filter(); // capture, filter, blur, (balance), threshold

switch (render) {

case 0:

glClear(GL_COLOR_BUFFER_BIT);

renderTexture(texture, filter.captureFrame(), false, WIDTH, HEIGHT);

break;

case 1:

glClear(GL_COLOR_BUFFER_BIT);

renderTexture(texture, filter.filterFrame(), true, WIDTH, HEIGHT);

break;

case 2:

glClear(GL_COLOR_BUFFER_BIT);

renderTexture(texture, filter.gaussianFrame(), true, WIDTH, HEIGHT);

break;

case 3:

glClear(GL_COLOR_BUFFER_BIT);

renderTexture(texture, filter.thresholdFrame(), true, WIDTH, HEIGHT);

break;

}

tracker.trackBlobs(filter.thresholdFrame(), true);

renderBlobs(tracker.getBlobs(), 0.0f, 1.0f, 1.0f, 1.0f, 0.0f, 1.0f);

SDL_GL_SwapBuffers();

}

deleteTexture(texture);

SDL_Quit();

return 0;

}

The updated Makefile:

all: main.cc lib/capture.o lib/filter.o lib/glhelper.o lib/blob.o lib/tracker.o

	g++ main.cc lib/capture.o lib/filter.o lib/glhelper.o lib/blob.o lib/tracker.o cvblobslib/libblob.a -o bin/main -L/usr/lib `sdl-config --cflags --libs` `pkg-config opencv --cflags --libs` -lGL

lib/capture.o: src/capture.h src/capture.cc

	g++ src/capture.cc -c -o lib/capture.o

lib/filter.o: lib/capture.o src/filter.h src/filter.cc

	g++ src/filter.cc -c -o lib/filter.o

lib/glhelper.o: lib/blob.o src/glhelper.h src/glhelper.cc

	g++ src/glhelper.cc -c -o lib/glhelper.o

lib/blob.o: src/blob.h src/blob.cc

	g++ src/blob.cc -c -o lib/blob.o

lib/tracker.o: lib/blob.o src/tracker.h src/tracker.cc

	g++ src/tracker.cc -c -o lib/tracker.o -I/usr/local/include/opencv/

clean:

	@rm -f *~ src/*~ lib/* bin/*

In my next post we will continue to build upon this project by adding the ability to detect fiducials.

If you download this project, you will need to run make in the cvblobslib folder to generate the libblob.a file required to link this project.

Download this project: tracker.tar.bz2

Comments

Really nice work, but I am facing problems when I try to compile it, in glhelper.h:

"src/glhelper.h:15: error: variable or field ‘renderBlobs’ declared void

src/glhelper.h:15: error: ‘vector’ was not declared in this scope

src/glhelper.h:15: error: expected primary-expression before ‘>’ token

src/glhelper.h:15: error: ‘blobs’ was not declared in this scope

src/glhelper.h:15: error: expected primary-expression before ‘r’

src/glhelper.h:15: error: expected primary-expression before ‘g’

src/glhelper.h:15: error: expected primary-expression before ‘b’

src/glhelper.h:15: error: expected primary-expression before ‘drag_r’

src/glhelper.h:15: error: expected primary-expression before ‘drag_g’

src/glhelper.h:15: error: expected primary-expression before ‘drag_b’

src/glhelper.cc:39: error: variable or field ‘renderBlobs’ declared void

src/glhelper.cc:39: error: ‘vector’ was not declared in this scope

src/glhelper.cc:39: error: expected primary-expression before ‘>’ token

src/glhelper.cc:39: error: ‘blobs’ was not declared in this scope

src/glhelper.cc:39: error: expected primary-expression before ‘r’

src/glhelper.cc:39: error: expected primary-expression before ‘g’

src/glhelper.cc:39: error: expected primary-expression before ‘b’

src/glhelper.cc:39: error: expected primary-expression before ‘drag_r’

src/glhelper.cc:39: error: expected primary-expression before ‘drag_g’

src/glhelper.cc:39: error: expected primary-expression before ‘drag_b’

make: *** [lib/glhelper.o] Error 1"

Please can you help me with this. any help would be appreciated.

-Pratik

Author

Hey Pratik,

I ran into the same issue you did. It seems my build environment somehow changed.

I've updated the tracker.tar.bz2 file, so you should be able to re-download and compile now.

The modifications I made were..

1. Updated the Makefile to reflect the proper location of OpenCV.. for my system I added -I/usr/include/opencv/

2. Updated glhelper.h, glhelper.cc, tracker.h, and tracker.cc to include the vector object from the STL and added the std namespace to the vector declarations.

Give it a try.. it should compile now, but let me know if it doesn't.

-Keith

Thanks for the help, the modifications did the trick.

I am also working on something similar for my final year project. But instead of differencing frames to detect the blobs, we are using a much more sophisticated method for background subtraction: mixture of Gaussians. It has been implemented in OpenCV and gives better results than the frame difference method. (I can give you my code if you want.)

You might want to try that. I wanted to ask you one more question: why are you rendering using OpenGL? Isn't the built-in OpenCV rendering good enough?

Author

Hey there.. glad that worked.

I would definitely like to check out what you've done. It sounds interesting. I've set up a simple uploader.. click here to use it.

I'm not familiar with everything OpenCV has to offer. I've put this project to the side for the time being due to frustration caused by hardware sensitivity.. or lack thereof. I was experimenting with manipulating 3D objects using the touch surface. Some of my latest projects are using the programmable pipeline. Does OpenCV have support for 3D rendering?

Uploaded the code.

Author

Cool.. I'll check it out later tonight. I'm not familiar with the Kalman filter, but it sounds interesting.

The method I used for calibration was based on barycentric coordinates. Let me know what you come up with.. and keep me posted on your project.

Hi, I am currently working on a motion recognition project which incorporates both OpenCV and C++. I have to create motion intensity images, and to do this I run a series of processing steps on a video of, for example, a person walking. First, I extracted every 5 frames of the video sequence, since there is hardly any motion within this interval. I then grayscaled the frames and applied both a threshold and a filter to eliminate noise, so I currently have a binary video with blobs of both the walking human and other objects in the frame.

I would like to find the area of all the blobs in the frame. Since the human blob would have the greatest area, I would be able to use this value as my new threshold area value to extract only the human blob and mask all the other pixels in the image to 0. Is there an OpenCV function which would allow me to calculate the blob area?

Author

Hey Lucy..

My OpenCV is a bit rusty at the moment.. but I believe cvBlobsLib has a method to retrieve the area of a blob.

A quick search yielded this post to sort the blobs by their areas.. http://chi3x10.wordpress.com/2011/05/18/sort-blobs-generated-by-cvblobslib-by-areas/.

I'm not aware of any method native to OpenCV that will allow you to evaluate the area, but there may be.

Cheers.

Hi, I am using Python 2.7.1 and OpenCV 2.2. When I try to run your code through IDLE, I get the following error:

ImportError: numpy.core.multiarray failed to import

Traceback (most recent call last):

File G:/OpenCV2.2/samples/python/123 , line 5, in

import cv

ImportError: numpy.core.multiarray failed to import

Please help me out.

Author

Hi Eric.. I'm not too familiar with that environment. I don't think I can be much help. Let me know what you find.

Hi, I'm trying your code out with the Kinect. It runs fine after I changed one detail:

The start index i=1 in line 30 (tracker.cpp) causes problems, so I decreased it to zero and added a filter for big areas:

//i.e. max_area=12000

blob_result.Filter(blob_result, B_EXCLUDE, CBlobGetArea(), B_GREATER, max_area);

[....]

for (int i = 0; i < blob_result.GetNumBlobs(); i++) {

[...]

}

Regards Olaf

Author

Hey Olaf,

Thanks for pointing that out. If I recall, I was starting with index 1 to eliminate the large blob that typically gets assigned at index 0. After reading your comment, I realize that's not always the case. This code should be modified to include your correction. Thanks again.

I'm getting this error when trying to $sudo make your program, i'm using ubuntu 12.10. Do you have any idea on what it is? Thx

g++ main.cc lib/capture.o lib/filter.o lib/glhelper.o lib/blob.o lib/tracker.o cvblobslib/libblob.a -o bin/main -L/usr/lib `sdl-config --cflags --libs` `pkg-config opencv --cflags --libs` -lGL -I/usr/include/opencv/

lib/tracker.o: In function `cTracker::trackBlobs(cv::Mat&, bool)':

tracker.cc:(.text+0x2b8): undefined reference to `CBlobResult::CBlobResult(_IplImage*, _IplImage*, int, bool)'

collect2: error: ld returned 1 exit status

make: *** [all] Error 1

Author

Hey Vitor..

Are you using the same cvblobslib library that was in the download? If you descend into the cvblobslib folder and perform a make clean; make .. does it compile okay (no warnings or errors)?

I haven't messed with this project in a while, but I just tried downloading and compiling/linking and everything went smoothly. I'm stumped.

Let me know if you come up with anything.

Keith

Thank you for the quick reply. I used the make command in the cvblobslib folder and it worked. I really like your code.

I'm trying to code a car counting program and I'm having problems tracking blobs. I have been able to identify them but I can't track them. I'm using cvblobslib. I'm trying to get a better insight into your tracking code to see if I can use the idea to solve my problem. Do you have any other tips for tracking blobs throughout the frames?

Great website, thank you.

Author

Cool.. glad it's working.

Olaf (comment above) pointed out a bug in this project. I don't think I've ever modified this project to correct it. You might take a peek at that if you haven't already.

I have a post up on implementing the Connected Component Labeling method which, if I recall, is the method cvBlobsLib uses.. but that post provided an alternative to using cvBlobsLib. You might take a peek at it here. That project has a bit more to do with detection than tracking though.

I'm a bit rusty on this stuff, so I'm not sure I can give you any other pointers. Let me know if you get a web page up or something.

Hello,

I'm doing a project involving eye detection and tracking, and I guess the blob detection method will do the job. But i'm very new to Ubuntu and OpenCV and hence having a lot of troubles in installing components and compiling basic codes. I have installed cvblob as directed in http://code.google.com/p/cvblob/wiki/HowToInstall

When I compile programs, these are the errors I get:

error: ‘CBlobResult’ was not declared in this scope

error: ‘CBlob’ was not declared in this scope etc...

and a similar error for all functions used.

I think the header file cvblob.h is not linking properly.

Please help.

Author

Hey Suraj..

I just reviewed what I did with this project.. I'm including the BlobResult.h header file. I appear to be using both the CBlob and CBlobResult objects same as you. You might try including the BlobResult.h header file in your project, and see if that does the trick. It's hard to say without seeing your code, but it sounds like you just need to find the right header file.

How do I make this run on Windows, VS++ 2010? I have cvblobslib compiled.

Author

Hey Pedro..

I'm not very familiar with Visual Studio.. I suspect you would need to start with setting up your build environment.. converting the Makefile as necessary for VS and linking to your OpenCV and SDL libraries. ..not sure I can offer you much insight.

Hi Keith,

Thanks for presenting such a nice blog to the new computer vision developers like me.

I am working on vehicle detection at night. The program is working fine; there's only one small issue: if two cars approach closely together, the detector treats both cars as one car.

I saw your program, but it only detects single blobs. Here, I want to detect each pair as one, i.e. the headlight pair of a car as one.

Please give your expert comments.

Thanks in advance

Thank you for this nice tutorial.

I have already tried cvblobslib to track stereo blobs. It is very useful for mono vision, but in stereo vision the blob ids don't match every frame, so you must add a stereo matching algorithm. So I am finally using a classic binary image + findContours + Kalman + the stereo triangulation function from OpenCV for that purpose.

Also it would be nice to have a CUDA version of it.

Again thank you for this good code.

Author

Hey Alvaro,

That sounds like an interesting project. Let me know if you have a link to it. Thanks for the feedback!

Thanks for the code.

I'm working on building my own multi-touch table.

I just want to know which code from your site will be helpful for me.

And how to get the input to the mouse event.

thank you

Author

Hey dhawas..

My software doesn't interface with the mouse. I think Linux has some built-in multi-touch support, but I'm not sure what the status is on it. This post and the previous post might be somewhere to start if you are looking to write your own software. You might also have a look at this link.

Good work, but I am facing problems when I try to compile it:

g++ main.cc lib/capture.o lib/filter.o lib/glhelper.o lib/blob.o lib/tracker.o cvblobslib/libblob.a -o bin/main -L/usr/local/lib/ `sdl-config --cflags --libs` `pkg-config opencv --cflags --libs` -lGL -I/usr/local/include/opencv

/tmp/ccHBPfGq.o: In function `main':

main.cc:(.text+0xe3): undefined reference to `cTracker::cTracker(double, double)'

main.cc:(.text+0x398): undefined reference to `cTracker::trackBlobs(cv::Mat&, bool)'

main.cc:(.text+0x3a7): undefined reference to `cTracker::getBlobs()'

main.cc:(.text+0x40f): undefined reference to `cTracker::~cTracker()'

main.cc:(.text+0x443): undefined reference to `cTracker::~cTracker()'

collect2: error: ld returned 1 exit status

can u help me?